The Little Book of Deep Learning

This book is a short introduction to deep learning for readers with

a STEM background, originally designed to be read on a phone

screen. This is the v1.2 updated on May

19th, 2024.

It is distributed under a non-commercial Creative Commons license

and was downloaded

600'000 times in a

bit more than a year.

Download the phone-formatted pdf

Buy a

$9 paperback copy on lulu.com

Download a printable A5 booklet pdf

Note that the version sold on Amazon and other on-line platforms

for $40 is "unauthorized": someone stole the content and sells

it. Preventing this would require to sue and I have better things to

do with my time.

Updates

V1.2 (May 19, 2024)

- Chapter 8. New chapter on low-resource methods (prompt

engineering, quantization, low-rank adapters, model merging).

- Miscellaneous. Changed "meta parameter" to "hyper

parameter".

- Section 3.6. Added a sub-section about fine-tuning.

- Section 4.8. Added the note about the quadratic cost of

the attention operator

- The missing bits. Added a note about the O(T) of standard

RNNs vs. the O(log T) of methods that leverage parallel scan.

V1.1.1 (Sep 20, 2023)

- Section 4.2. Added a paragraph about the equivariance of

convolution layers.

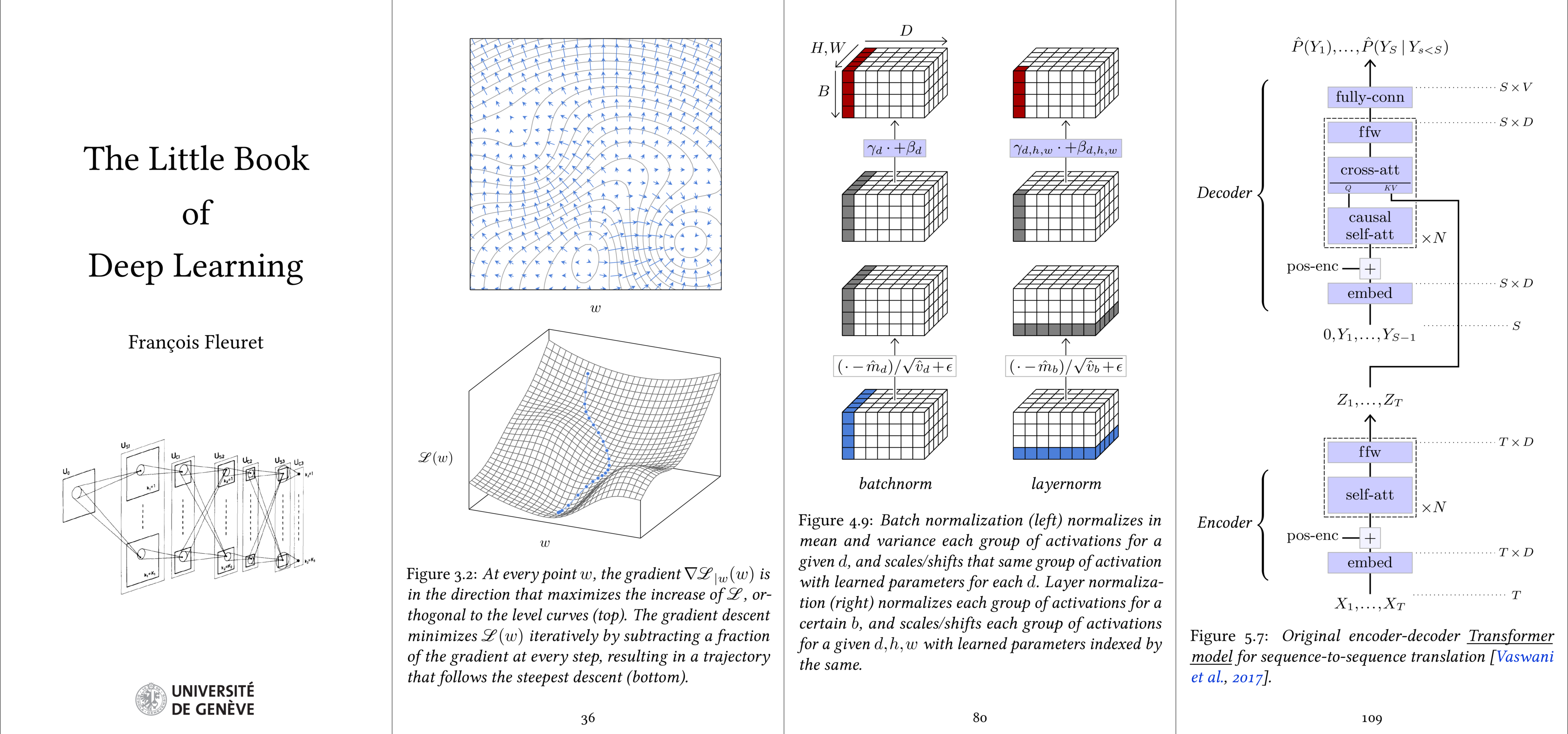

- Section 5.3. Fixed the description of the original

Transformer, and modified Figures 5.6, 5.7, 5.8, and 5.9

accordingly.

V1.1 (Sep 8, 2023)

- Miscellaneous. Fixed minor typos and phrasings.

- Section 1.3. Reformulated the text to clarify that overfitting is not

particularly related to noise, but to any properties specific to the

training set, as it is the case on the Figure 1.2.

- Section 3.2. Clarified the phrasing and changed the

Figure 3.1.

- Section 3.4. Fixed the indexing of the mappings in the example of a

composition.

- Section 3.7. Fixed the label "1TWh" in Figure 3.7, that should be

"1GWh".

- Section 4.5. Added a figure to illustrate the functioning of 2D

dropout.

- Section 4.6. Changed the Figure 4.8 so that in the top

part illustrating the re-scaling / translating after

normalization, the highlighted sub-blocks correspond to groups

of activations that are re-scaled / translated with the same

factor / bias.

- Section 6.6. Restricted the Figure 6.4. to three

sub-images to make the text more legible.

- Section 7.1. Added two paragraphs to introduce the

notion of Reinforcement Learning from Human Feedback.

- The missing bits. Removed the fine-tuning

sub-section, since most of it was moved to Section 7.1.